Databricks : Unity Catalog - First Step - Part 4 - Delta Sharing

We are going to discover the Delta Sharing functionality offered by Databricks with the Unity Catalog solution.

We are going to use a Databricks Account on AWS as a provider and a Databricks Account on Azure as a recipient for the sharing of objects with the Delta Sharing functionality for this discovery.

Note: Work is based on the state of the Unity Catalog solution at the end of Q1 2023 on AWS and Azure.

What’s Data Sharing

Data Sharing is a practice that consists in sharing data between different partners.

This can be for regulatory or contractual needs, but also to enhance and monetize data and products.

There is a very strong security and data governance issue when sharing data with partners or competitors in order to avoid any risk of data leakage and to guarantee the traceability of actions regarding the data usage.

We can use the Data Sharing when setting up a Data Mesh to share data from different products between different teams.

It is also common to set up a REST API interface to allow external partners to retrieve data by managing access rights directly through it, but there are solutions that greatly facilitate and secure this data sharing. The REST API interface is not necessarily the most efficient way to exchange large dataset between partners (for example in the context of a Data Lake or a Lakehouse).

Among the existing solutions, we are going to focus on the “Delta Sharing” functionality proposed by Databricks with the Unity Catalog solution in order to set up a data sharing with external partners.

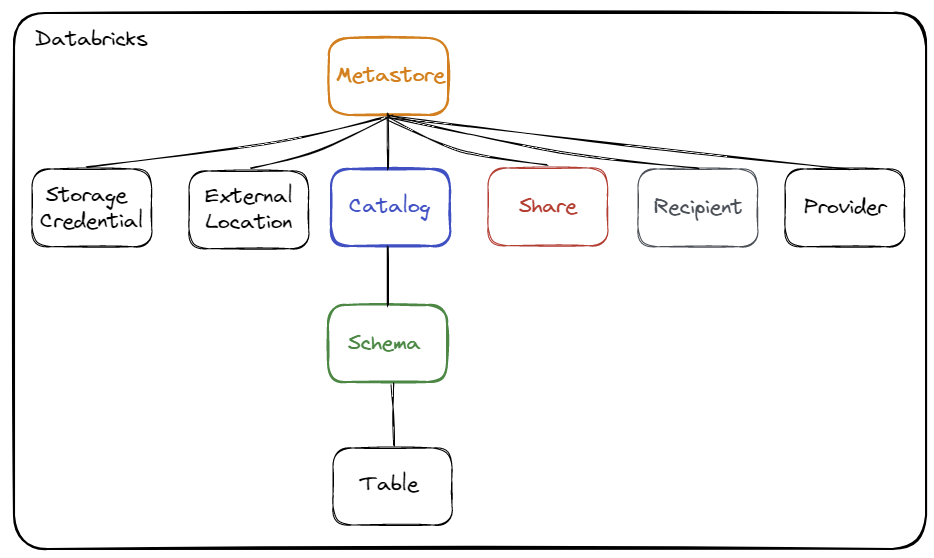

Unity Catalog and the object hierarchy

Unity Catalog is the Databricks solution that allows to have a unified and centralized governance for all the data managed by the Databricks resources as well as to secure and facilitate the management and the sharing of the data to all the internal and external actors of an organization.

You can get a more complete overview by reading the official documentation

Reminder concerning the hierarchy of objects :

The objects are :

- Storage Credential : This object is directly associated with the Metastore and is used to store access to a cloud provider (for example AWS S3) allowing the Unity Catalog solution to manage data rights

- External Location : This object is associated with the Metastore and allows you to store the path to a cloud provider (for example an AWS S3 resource) in combination with a Storage Credential to manage data access

- Metastore : Top-level object that can contain metadata

- Catalog : 1st level object allowing to organize data by Schema (also called Database)

- Schema : 2nd and last level object used to organize data (contains tables, views and functions)

- Table : Object used to define the structure and storage of data.

- Share : A logical grouping of the tables you want to share.

- Recipient : An object that identifies a partner (or group of users) who will have access to the shared data.

- Provider : An object that represents a partner who is sharing data.

When you want to access an object (for example a table), it will be necessary to use the name of the Catalog and the name of the Schema where the object is defined.

Example : select * from catalog.schema.table

Note: The concepts of Share, Recipient and Provider are specific to the Delta Sharing feature.

What’s the Delta Sharing feature

This functionality is based on an open protocol developed by Databricks to allow the sharing of data in a secure way between several platforms.

This functionality is based on the Unity Catalog solution in order to centralize data governance (catalog management and data rights).

The Delta Sharing Server is managed by the Unity Catalog solution and allows a recipient to have direct access to the data on the storage without going through a cluster or a SQL Warehouse

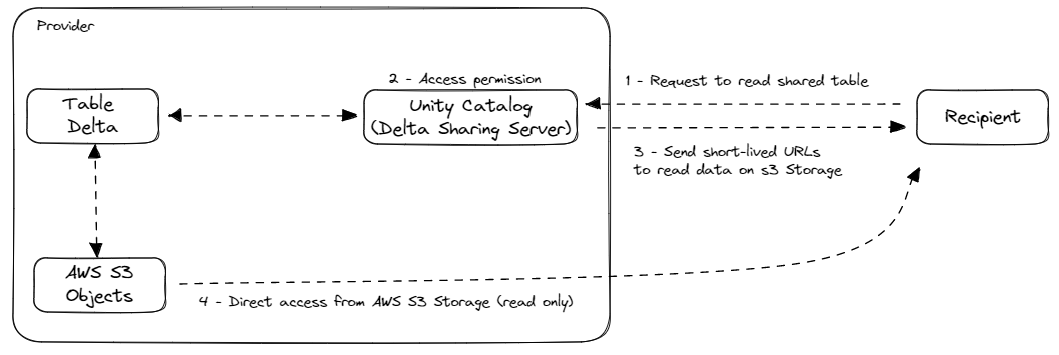

Diagram of how Delta Sharing works :

The operation is as follows:

The operation is as follows:

- The recipient requests the reading of a shared table

- The provider (with the Delta Sharing Server managed by Unity Catalog) will control access to the shared table

- The provider will send the access links (valid for a very short period) to the storage where the data is actually located (without going through a cluster or SQL Warehouse)

- The recipient will directly access the storage to retrieve the data from the shared table in read-only mode

The three main use cases, according to Databricks, for the use of this functionality :

- Internal Data Sharing (subsidiaries, teams)

- Create a Data Mesh to exchange data from different products between different subsidiaries, teams, applications

- External Data Sharing (companies)

- Share data with partners and suppliers, whether or not they are on the Databricks platform

- Data monetization

- Distribute and monetize your products (data) with customers who are or are not on the Databricks platform

Some benefits of this feature:

- A provider can easily define shares on a specific version or partition of a delta table

- Tables are shared live and can be updated by the provider only

- All clients that can read parquet data can access the shared data

- Data transfer relies on S3/ADLS/GCS resources only, therefore transfer is extremely efficient and inexpensive

The “Delta Sharing” feature can be used in three different ways:

- Open Sharing

- This allows any client not using Databricks with the Unity Catalog solution to access the shared data

- It is necessary to use a specific library to be able to read data through Delta Sharing

- The recipients of this type of sharing are based on the lifetime of a Databricks token (defined in the Unity Catalog Metastore of the supplier)

- Databricks-to-Databricks Sharing

- This corresponds to the sharing of data between two Metastores of the Unity Catalog solution (in the same Databricks Account or in different Databricks Accounts (AWS, Azure or GCP))

- One of the advantages of this type of sharing is that neither the Recipient nor the Provider needs to manage a Databricks token to access the shared data.

- The security of this sharing (including access verification, authentication and audit) is managed entirely through the Delta Sharing functionality and the Databricks platform.

- It is also possible to share read-only notebooks

- Marketplace:

- This corresponds to the fact of declaring oneself as a Databricks partner in order to be able to propose these shared data on the Databricks Marketplace. We are not going to develop this sharing method in the context of this discovery.

The objects specific to this functionality that have been implemented in the Unity Catalog solution are :

Provider: This defines the data provider for the recipientRecipient: This allows you to define the different recipients (users) to manage access rights on the shared dataShare: This is a collection of read-only tables and notebooks that allow data from a Metastore to be shared with recipients- The sharing of notebooks only concerns recipients who also use the Unity Catalog solution

Information about shares :

- This is a logical wrapper that allows you to group all the objects (delta tables and notebooks) that you want to share in read-only mode.

- Unity Catalog writes the information about the shares in the following storage directory (AWS S3):

s3://s3-dbx-metastore-uc/metastore-sandbox/<Metastore ID>/delta-sharing/shares/<Share ID>/* - It is possible to add all the tables of the Metastore in a share

- You only need to have

SELECTandUSErights on the objects you want to add in the share

- You only need to have

- Access rights to the share can only be given to the defined recipients

- The name of a share can be a name already used by a catalog or a schema (no restriction, but it is recommended to have a different name to facilitate the shares management)

- It is possible to share only Delta tables whether they are managed or external

- It is possible to define a shared partition based on the existing partitions of the Delta table to automatically limit data access according to the properties of each recipient

- Managing partitions at the share level can be extremely useful if you have defined partitions on information such as country, period or recipient ID to automatically limit access to data.

- This allows you to set constraints on partitions at the share level only once and to be able to set properties for each recipient (Recipient)

- It is possible to define whether the history of a Delta table is shared or not

- It is possible to define whether the Change Data Feed of a Delta table is shared or not

- It is possible to define a shared partition based on the existing partitions of the Delta table to automatically limit data access according to the properties of each recipient

- It is recommended to set up aliases for each object added to the share

- When no alias is defined for the schema name, the source schema name will be used

- When no alias is defined for the name of the schema and the name of the table then the name of the source schema and the name of the source table will be used

- It is not possible to update an alias after adding the object to the share. (It is necessary to remove and add the object again to modify the alias)

- Information specific to notebook sharing (in the case of Databricks-to-Databricks sharing only):

- When the notebook is shared, it is actually exported in HTML format to a directory in Unity Catalog’s managed storage (AWS S3) (specifically the

s3://s3-dbx-metastore-uc/metastore-sandbox/<Metastore ID>/delta-sharing/shares/<Share ID>/notebooks_files/*directory), which has the effect of keeping a specific version of the notebook that is to be shared. - When the notebook that has been shared is modified, this has no impact on the shared notebook. You have to remove it and add it again to the share to share the latest version of the notebook.

- When the notebook is shared, it is actually exported in HTML format to a directory in Unity Catalog’s managed storage (AWS S3) (specifically the

Information about recipients :

- This is an object at the Metastore level that allows you to define access for a partner/customer

- Read access to the share is managed on the recipient object

- A recipient can access all the shares in a Metastore according to the rights given by the share owners

- A recipient cannot access Metatore Unity Catalogs, only authorized shares.

- In order to create a recipient it is necessary to activate the Delta Sharing feature for the Metastore

- There are two types of recipient :

- Open Sharing : the recipient uses a Databricks token whose validity period is defined at the Metastore level

- Databricks-to-Databricks Sharing : the recipient uses a Metastore sharing ID (no validity period)

- Users of a Metastore cannot access shares of the same Metastore

Resources about some connectors allowing to access shared data without using a Databricks resource:

What are the Audit Logs

When you are a provider, it is essential to be able to track the use of your data by the recipients defined.

This can allow you to define billing rules when you want to monetize access to data, but also to simply track the use of shared objects.

Some examples of captured events in the Metastore of the Unity Catalog solution (not exhaustive):

- Create and Delete a share

- Create and Delete a recipient

- Request access from a recipient

- Execute a query on a shared object from a recipient

- Result (metadata) of the query on a shared object from a recipient

To do this, it is necessary to set up the storage of audit logs for events related to the Metastore of the Unity Catalog solution.

The storage of audit logs requires the implementation of a log configuration at the level of the Databricks account.

Some informations :

- Audit logs are not retrieved in real time, but every X minutes and stored in storage resource specified at the log configuration level in the Account Databricks.

- The audit log files are in JSON format.

- The audit log files for the Metastore Unity Catalog are stored in a subdirectory named

workspace=0.- When you will set up the log configuration, you must be careful not to filter the Databricks Workspace identifier or to take into account the Databricks Workspace identifier with the value

0otherwise you will not get the logs concerning the events related to the Unity Catalog Metastore.

- When you will set up the log configuration, you must be careful not to filter the Databricks Workspace identifier or to take into account the Databricks Workspace identifier with the value

- You will not be able to update or delete a log configuration at the Databricks Account level, you can only deactivate it and create a new one (even if you use the same name, the configuration identifier will be different with a unique identifier)

All the events captured at the account level and more specifically concerning Unity Catalog are traced in the audit log and can be analyzed. We have voluntarily limited our analysis to two types of actions for this demonstration.

You will find an exhaustive list of actions/events that you can analyze in the official documentation.

Set-up the environment

We are going to set up a set of elements allowing us to discover in more detail the Delta Sharing functionality of the Unity Catalog solution.

Context

Prerequisite:

- Existence of an AWS S3 resource named

s3-dbx-metastore-uc - Existence of an AWS S3 resource named

s3-demo-data-uc - Existence of a Metastore named

metastore-sandboxwith the Storage Credential namedsc-metastore-sandboxallowing to store the default data in the AWS S3 resource nameds3-dbx-metastore-uc - Existence of an AWS IAM role named

role-databricks-demo-data-ucand an AWS IAM policy namedpolicy-databricks-demo-data-ucallowing the global Databricks role to manage access to the AWS S3 resource nameds3-demo-data-uc - Existence of a Storage Credential named

sc-demo-data-ucand an External Location namedel_demo_data_ucallowing the global Databricks role to manage the access to the AWS S3 resource nameds3-demo-data-uc - Existence of the

grp_demogroup - Existence of the user

john.do.dbx@gmail.comin the groupgrp_demo - Existence of a SQL Warehouse with the right of use for the group

grp_demo

Setting up rights for the grp_demo group :

- Give the right to create catalogs at the Metastore level for the

grp_demogroup - Give the right to read external files from the External Location object named

el_demo_data_uc. - Give the right to create a share space at the Metastore level for the group

grp_demo. - Give the right to create a recipient at the Metastore level for the group

grp_demo.

1-- 1. Give Create Catalog right on Metastore to grp_demo

2GRANT CREATE CATALOG ON METASTORE TO grp_demo;

3

4-- 2. Give Read Files right on el_demo_data_uc location to grp_demo

5GRANT READ FILES ON EXTERNAL LOCATION `el_demo_data_uc` TO grp_demo;

6

7-- 3. Give Create share right on Metastore to grp_demo

8GRANT CREATE_SHARE ON METASTORE TO grp_demo;

9

10-- 4. Give Create recipient right on Metastore to grp_demo

11GRANT CREATE_RECIPIENT ON METASTORE TO grp_demo;

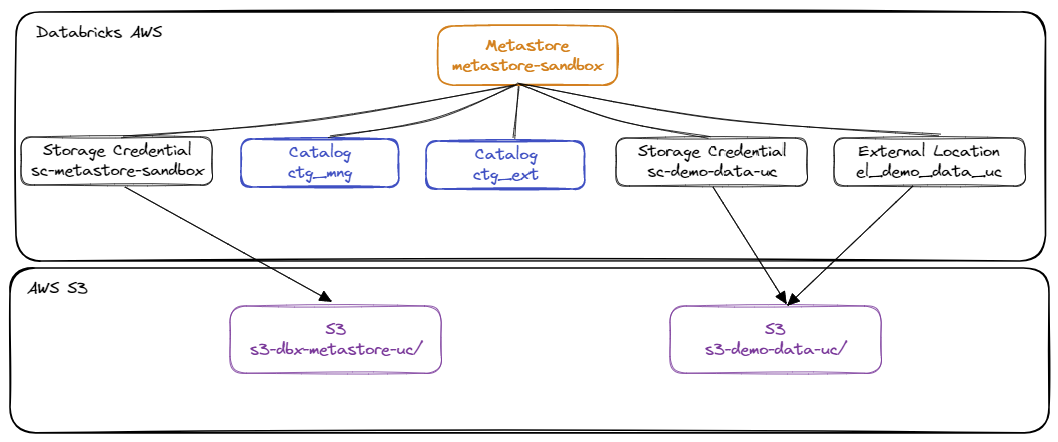

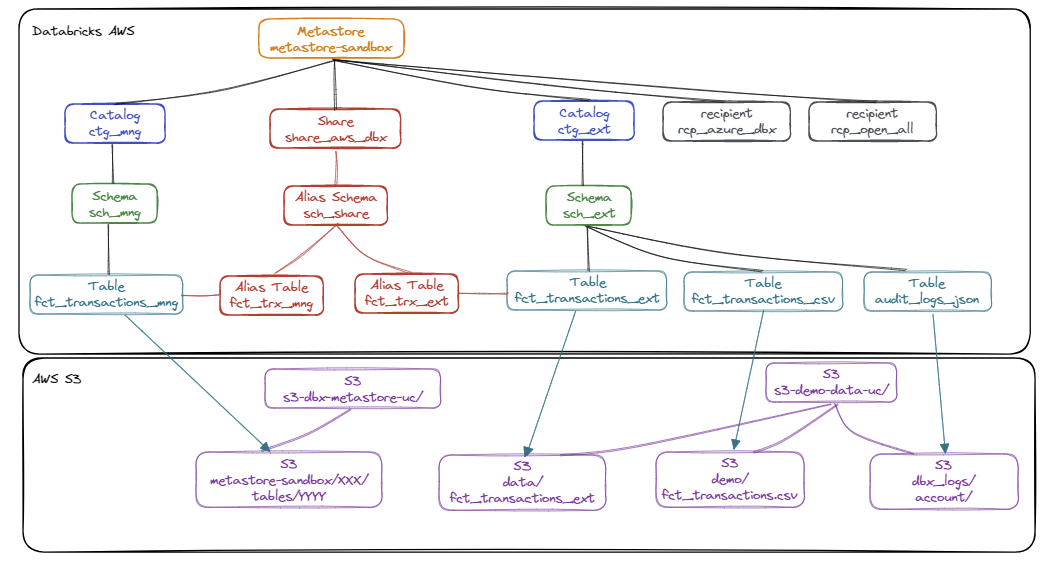

Diagram of the environment

Concerning the elements on AWS

Diagram of the objects in the Metastore Unity Catalog on AWS:

List of items:

List of items:

- Metastore

metastore-sandbox: Main metastore at the AWS Databricks Account level - Storage Credential

sc-metastore-sandbox: Login information to manage access rights to the AWS resource nameds3-dbx-metastore-uc. - Storage Credential

sc-demo-data-ucand External Locationel-demo-data-uc: Login information to manage access rights to the AWS resource nameds3-demo-data-uc. - Catalog

ctg_mng: Logical object to organize and manage managed data - Catalog

ctg_ext: Logical object to organize and manage external data

Diagram of all the objects that we will set up and use on the Unity Catalog Metastore on AWS:

List of items:

List of items:

- Catalog

ctg_mngand Schemasch_mng: Logical object to organize and manage managed data - Catalog

ctg_extand Schemasch_ext: Logical object to organize and manage external data - Table

fct_transactions_mng: Unity Catalog managed table containing transaction information and partitioned on theid_clientcolumn - Table

fct_transactions_ext: External table containing transaction information not partitioned but with the Change Data Feed enabled in the AWS S3 resource nameds3-demo-data-uc/data/fct_transactions_ext - Table

fct_transactions_csv: External table allowing direct access to the CSV source file of the transactions in the AWS S3 resource nameds3-demo-data-uc/demo/fct_transactions.csv. - Table

audit_logs_json: External table allowing direct access to all the audit log files based on the data of the AWS S3 resource nameds3_demo_data_uc/dbx_logs/account - Sharing

share_aws_dbx: Logical object to organize and manage shared objects- The

sch_shareschema Alias allows you to group objects from different catalogs and schemas into a single logical schema for data sharing - The

fct_trx_mngtable alias allows you to make data from thefct_transactions_mngtable available without having to communicate the table name - The Alias of the

fct_trx_exttable allows you to provide the data of thefct_transactions_exttable without having to communicate the table name

- The

- Recipient

rcp_azure_dbx: Object allowing users of the Metastore Unity Catalog Azure to access the data of theshare_aws_dbxshare. - Recipient

rcp_open_all: Object allowing users not using Metastore Unity Catalog to access data from theshare_aws_dbxshare

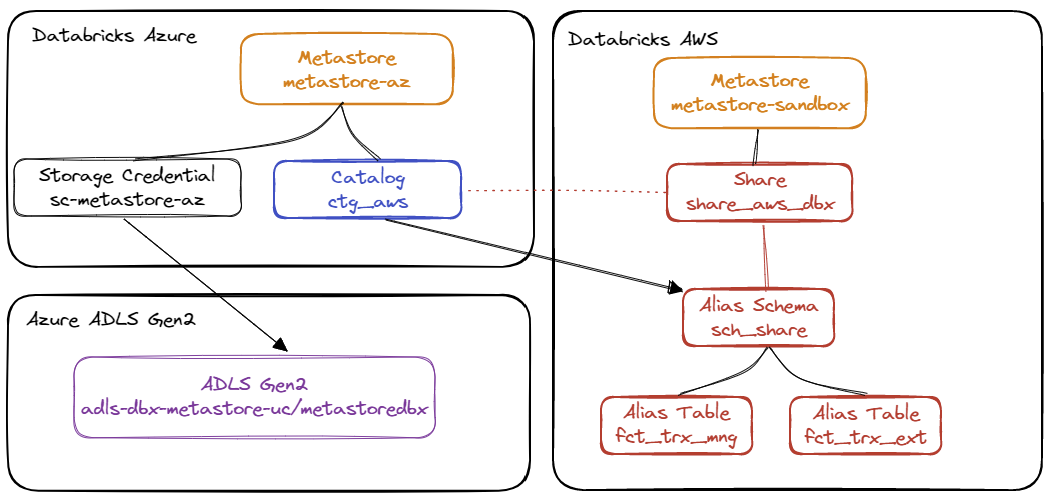

Concerning the elements on Azure:

Diagram of all the elements that we will implement and use on the Unity Catalog Metastore on Azure:

List of elements :

List of elements :

- Metastore

metastore-az: Main metastore at the Azure Databricks Account level - Storage Credential

sc-metastore-az: Login information to manage access rights to the Azure ADLS Gen2 resource namedadls-dbx-metastore-uc. - Catalog

ctg_aws: Logical object to synchronize with theshare_aws_dbxshare and access all elements of thesch_shareshared schema

Environment variable

In order to facilitate the implementation of the various elements throughout this discovery, we will set up some environment variables to use with the Databricks REST API tool.

1# Alias using the netrc file from databricks

2alias dbx-api='curl --netrc-file ~/.databricks/.netrc'

3

4########################################

5############# AWS ######################

6########################################

7# Url for Account Access

8export DBX_API_ACCOUNT_URL="https://accounts.cloud.databricks.com"

9# Url for Workspace Access

10export DBX_API_URL="<Workspace Databricks AWS URL>"

11# Account ID

12export DBX_ACCOUNT_ID="<Account Databricks ID>"

13# AWS Metastore Name

14export DBX_METASTORE_NAME="metastore-sandbox"

15# AWS Share Name

16export DBX_SHARE_NAME="share_aws_dbx"

17# AWS Provider Name

18export DBX_PROVIDER_NAME="dbx_aws_sharing"

19# AWS Recipient for Databricks to Databricks Sharing

20export DBX_RECIPIENT_DBX="rcp_azure_dbx"

21# AWS Recipient for Open Sharing

22export DBX_RECIPIENT_OPEN="rcp_open_all"

23

24

25########################################

26############# Azure ####################

27########################################

28# Name of the ADLS Gen2 Storage

29export AZ_ADLS2_NAME="adls-dbx-metastore-uc"

30# Name of the Container in the ADLS Gen2 Storage

31export AZ_ADLS2_CONTAINER_NAME="metastoredbx"

32# Name of the Resource Group

33export AZ_RG_NAME="<Azure Resource Group Name>"

34# Region

35export AZ_REGION="francecentral"

36# Tags

37export AZ_TAGS=("owner=john" "project=databricks" "TTL=7" "environment=demo")

38# Azure Databricks Access Connector Name

39export AZ_DBX_CONNECTOR_NAME="DBXAccessConnectorUC"

40# Azure Workspace Databricks URL

41export DBX_AZ_API_URL="<Azure Workspace Databricks URL>"

42# Azure Workspace Databricks ID

43export DBX_AZ_WORKSPACE_ID="<Azure Workspace Databricks ID>"

44# Azure Metastore Name

45export DBX_AZ_METASTORE_NAME="metastore-az"

46# Azure Storage Credential Name

47export DBX_AZ_METASTORE_SC_NAME="sc-metastore-az"

48# Azure Metastore ADLS Gen2 Path

49export DBX_AZ_ADLS2_METASTORE_PATH="${AZ_ADLS2_CONTAINER_NAME}@${AZ_ADLS2_NAME}.dfs.core.windows.net/${AZ_ADLS2_CONTAINER_NAME}"

Setting up a dataset on the AWS S3 resource

Content of the file fct_transactions.csv :

1id_trx,ts_trx,id_product,id_shop,id_client,quantity

21,2023-04-01 09:00:00,1,2,1,1

32,2023-04-01 11:00:00,1,1,1,3

43,2023-04-03 14:00:00,1,2,1,1

54,2023-04-05 08:00:00,3,1,2,9

65,2023-04-06 10:00:00,1,2,1,3

76,2023-04-06 12:00:00,2,2,1,1

87,2023-04-10 18:30:00,2,1,2,11

98,2023-04-10 18:30:00,3,1,2,2

Copying data to the demo directory of the AWS S3 resource named s3-demo-data-uc with the AWS CLI tool :

1aws s3 cp fct_transactions.csv s3://s3-demo-data-uc/demo/fct_transactions.csv

Setting up the objects on the Unity Catalog Metastore

Note : Using a user from grp_demo group

- Creation of catalogs

ctg_mng: Catalog to manage the elements managed by Unity Catalogctg_ext: Catalog to manage external elements

1-- Create Catalog (for managed data)

2CREATE CATALOG IF NOT EXISTS ctg_mn

3 COMMENT 'Catalog for managed data';

4

5-- Create Catalog (for external data)

6CREATE CATALOG IF NOT EXISTS ctg_ext

7 COMMENT 'Catalog for external data';

- Creation of schemas The list of schemas :

ctg_ext.sch_ext: Schema to manage the external elementsctg_mng.sch_mng: Schema to manage the elements managed by Unity Catalog

1-- Create Schema for external data

2CREATE SCHEMA IF NOT EXISTS ctg_ext.sch_ext

3 COMMENT 'Schema for external data';

4

5-- Create Schema for managed data

6CREATE SCHEMA IF NOT EXISTS ctg_mng.sch_mng

7 COMMENT 'Schema for managed Data';

- Creation of tables

1-- External table

2CREATE TABLE ctg_ext.sch_ext.fct_transactions_csv (

3 id_trx integer not null

4 ,ts_trx timestamp not null

5 ,id_product integer not null

6 ,id_shop integer not null

7 ,id_client integer not null

8 ,quantity integer not null

9)

10USING CSV

11OPTIONS (path "s3://s3-demo-data-uc/demo/fct_transactions.csv",

12 delimiter ",",

13 header "true")

14 ;

15

16

17-- External table with Change data feed activated

18CREATE TABLE ctg_ext.sch_ext.fct_transactions_ext (

19 id_trx integer not null

20 ,ts_trx timestamp not null

21 ,id_product integer not null

22 ,id_shop integer not null

23 ,id_client integer not null

24 ,quantity integer not null

25 ,ts_tech timestamp not null

26)

27LOCATION 's3://s3-demo-data-uc/data/fct_transactions_delta'

28COMMENT 'External Delta Table for Transaction Data'

29TBLPROPERTIES (delta.enableChangeDataFeed = true);

30

31

32-- Managed table with column partition (id_client)

33CREATE TABLE ctg_mng.sch_mng.fct_transactions_mng (

34 id_trx integer not null

35 ,ts_trx timestamp not null

36 ,id_product integer not null

37 ,id_shop integer not null

38 ,id_client integer not null

39 ,quantity integer not null

40 ,ts_tech timestamp not null

41)

42PARTITIONED BY (id_client)

43COMMENT 'Managed Data Table for Transaction Data'

44;

- Populate tables

1-- Truncate table

2DELETE FROM ctg_ext.sch_ext.fct_transactions_ext;

3DELETE FROM ctg_mng.sch_mng.fct_transactions_mng;

4

5-- Add data in the external table

6INSERT INTO ctg_ext.sch_ext.fct_transactions_ext (

7 id_trx

8 ,ts_trx

9 ,id_product

10 ,id_shop

11 ,id_client

12 ,quantity

13 ,ts_tech

14)

15SELECT id_trx

16 ,ts_trx

17 ,id_product

18 ,id_shop

19 ,id_client

20 ,quantity

21 ,current_timestamp() as ts_tech

22FROM ctg_ext.sch_ext.fct_transactions_csv;

23

24

25-- Add data in the managed table

26INSERT INTO ctg_mng.sch_mng.fct_transactions_mng (

27 id_trx

28 ,ts_trx

29 ,id_product

30 ,id_shop

31 ,id_client

32 ,quantity

33 ,ts_tech

34)

35SELECT id_trx

36 ,ts_trx

37 ,id_product

38 ,id_shop

39 ,id_client

40 ,quantity

41 ,current_timestamp() as ts_tech

42FROM ctg_ext.sch_ext.fct_transactions_csv;

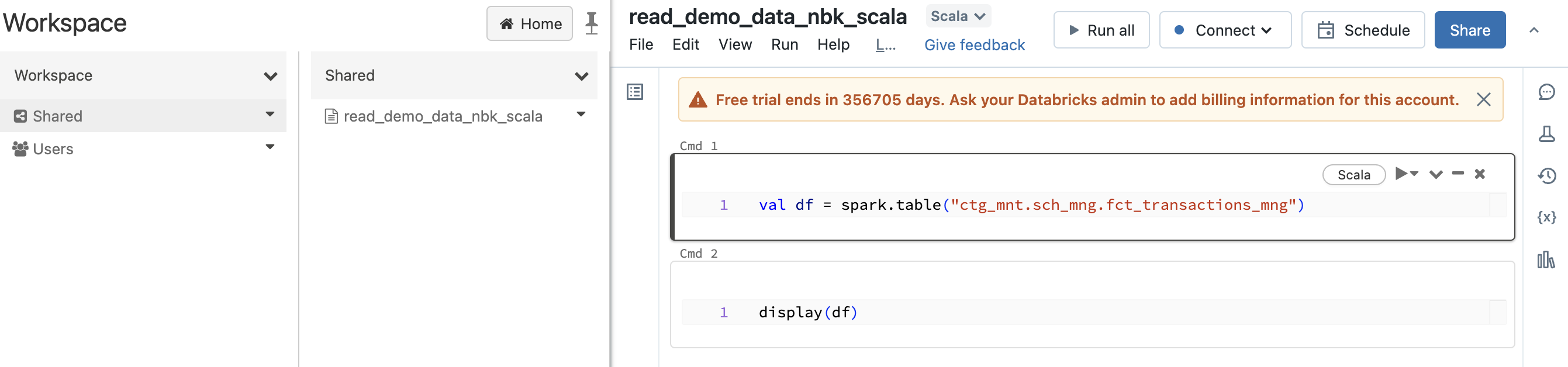

Creating a notebook to be able to share it

We will import a scala notebook named read_demo_data_nbk_scala into the Shared directory of the Databricks AWS Workspace with Databricks REST API :

1dbx-api -X POST ${DBX_API_URL}/api/2.0/workspace/import -H 'Content-Type: application/json' -d "{

2 \"path\": \"/Shared/read_demo_data_nbk_scala\",

3 \"content\": \"Ly8gRGF0YWJyaWNrcyBub3RlYm9vayBzb3VyY2UKdmFsIGRmID0gc3BhcmsudGFibGUoImN0Z19tbnQuc2NoX21uZy5mY3RfdHJhbnNhY3Rpb25zX21uZyIpCgovLyBDT01NQU5EIC0tLS0tLS0tLS0KCmRpc3BsYXkoZGYp\",

4 \"language\": \"SCALA\",

5 \"overwrite\": \"true\",

6 \"format\":\"SOURCE\"

7}"

Configuring the audit logs on the Metastore

We will start with the configuration of audit logs in order to be able to keep track of all events related to the Unity Catalog Metastore (for the Delta Sharing feature).

It is possible to capture all the events at the Databricks Account level or at the level of each Databricks Workspace. In our case, we are going to focus on the Databricks Account level because it is at this level that the events related to the Metastore Unity Catalog are captured.

For this demonstration, we will focus only on events concerning requests from recipients on shared objects, but the audit logs contain much more information.

Goals :

- We want to capture audit logs in AWS S3 resource named

s3-demo-data-uc/dbx_logs - We do not want to filter Workspace Databricks identifiers in order to capture all the audit logs offered by Databricks

You need to follow these steps: (based on the official documentation)

- Create an AWS IAM role and AWS IAM policy for Databricks to access (and write to) the AWS S3 resource named

s3-demo-data-uc/dbx_logs - Creation of a Databricks Credential at the Databricks Account level to store connection information (AWS IAM role created)

- Create a Storage Databricks at the Account Databricks level to store the path to the AWS S3 resource named

s3-demo-data-uc/dbx_logs - Creation of the log configuration at the Account Databricks level based on the Credential Databricks and the Storage Databricks

Activate the Delta Sharing feature on the Metastore

The management (creation and deletion) of shares does not require the activation of the Delta Sharing feature on the Metastore. Enabling the Delta Sharing feature is mandatory only when you want to manage recipients and configure access to shared objects.

To enable this feature, perform the following action:

- Update the Metastore configuration with the following information:

- The

delta_sharing_scopeinformation must be set toINTERNAL_AND_EXTERNALINTERNALvalue means the feature is disabled

- The

delta_sharing_recipient_token_lifetime_in_secondsinformation must be filled with the number of seconds of validity of the Databricks Token (for example with the value86400for one day)- The recipient using a Databricks Token will be able to access shared objects only during the validity period of the Databricks Token

- The

delta_sharing_organization_nameinformation must be filled with the name representing your organization as a provider (for example:dbx_aws_sharing)- This is the name that recipients, using Databricks, will see as the share provider

- The

Performing the action with Databricks REST API :

1# Get the Metastore ID (databricks)

2export TMP_DBX_METASTORE_ID=`dbx-api -X GET ${DBX_API_URL}/api/2.1/unity-catalog/metastores | jq -r '.metastores[]|select(.name==$ENV.DBX_METASTORE_NAME)|.metastore_id'`

3

4# Update the Metastore configuration to activate Delta Sharing (external)

5dbx-api -X PATCH ${DBX_API_URL}/api/2.1/unity-catalog/metastores/${TMP_DBX_METASTORE_ID} -H 'Content-Type: application/json' -d "{

6 \"delta_sharing_scope\": \"INTERNAL_AND_EXTERNAL\",

7 \"delta_sharing_recipient_token_lifetime_in_seconds\": \"86400\",

8 \"delta_sharing_organization_name\": \"${DBX_PROVIDER_NAME}\"

9}"

Create a share in the Metastore

Creating a share can be done with Databricks REST API or directly with SQL commands.

The creation of a share requires the following rights on the Metastore:

- Right to create share objects on the Metastore

- Right to use catalogs and schemas containing the data to be shared

- Right to read the delta tables containing the data to be shared

1GRANT CREATE_SHARE ON METASTORE TO grp_demo;

2GRANT USE, USE SCHEMA, SELECT ON CATALOG ctg_mng TO grp_demo;

3GRANT USE, USE SCHEMA, SELECT ON CATALOG ctg_ext TO grp_demo;

The creation of the share allows you to define all the data to be shared :

- Create the

share_aws_dbxshare at the Metastore Unity Catalog level - Add the table

ctg_ext.sch_ext.fct_transactions_extusing the aliassch_share.fct_trx_ext- Enable the Change Data Feed in the data sharing (option

cdf_enable: true) - Allow access from version n°0 of the data history (option

start_version: 0)

- Enable the Change Data Feed in the data sharing (option

- Add the table

ctg_mng.sch_mng.fct_transactions_mngusing the aliassch_share.fct_trx_mng- Allow access to only the latest version of data (no access to history) (option

history_data_sharing_status: false) - Add partition management to allow access to data only for the partition (id_client) which is equal to the value of the property named

ìd_clientassociated with the recipient when it was created. (Each recipient will have a different value for theid_clientproperty to highlight this access policy which cannot be bypassed by the recipient)

- Allow access to only the latest version of data (no access to history) (option

- Add the

/Shared/read_demo_data_nbk_scalanotebook in share manually (API didn’t work during our tests)

Using Databricks REST APIs :

1# 1. Create share

2dbx-api -X POST -H 'Content-Type: application/json' ${DBX_API_URL}/api/2.1/unity-catalog/shares -d "{\"name\": \"${DBX_SHARE_NAME}\", \"comment\": \"Share DBX AWS Data\"}"

3

4

5# 2. Add table ctg_ext.sch_ext.fct_transactions_ext

6dbx-api -X PATCH ${DBX_API_URL}/api/2.1/unity-catalog/shares/share_aws_dbx -H 'Content-Type: application/json' -d '{"updates": [

7 {"action": "ADD"

8 ,"data_object": {

9 "name": "ctg_ext.sch_ext.fct_transactions_ext",

10 "data_object_type": "TABLE",

11 "shared_as": "sch_share.fct_trx_ext",

12 "cdf_enabled": true,

13 "start_version": 0,

14 "status": "ACTIVE"

15 }

16 }

17 ]

18}'

19

20

21# 3. Add table ctg_mng.sch_mng.fct_transactions_mng

22dbx-api -X PATCH ${DBX_API_URL}/api/2.1/unity-catalog/shares/share_aws_dbx -H 'Content-Type: application/json' -d '{"updates": [

23 {"action": "ADD"

24 ,"data_object": {

25 "name": "ctg_mng.sch_mng.fct_transactions_mng",

26 "data_object_type": "TABLE",

27 "shared_as": "sch_share.fct_trx_mng",

28 "history_data_sharing_status": "DISABLED",

29 "status": "ACTIVE",

30 "partitions": [

31 {

32 "values": [

33 {

34 "name": "id_client",

35 "recipient_property_key": "id_client",

36 "op": "EQUAL"

37 }

38 ]

39 }

40 ]

41 }

42 }

43 ]

44}'

Using SQL commands :

1-- 1. Create share

2CREATE SHARE IF NOT EXISTS share_aws_dbx COMMENT 'Share DBX AWS Data';

3

4-- 2. Add table ctg_ext.sch_ext.fct_transactions_ext

5-- With alias sch_share.fct_trx_ext

6-- With Change Data Feed and Historical Data

7ALTER SHARE share_aws_dbx

8ADD TABLE ctg_ext.sch_ext.fct_transactions_ext

9 COMMENT 'Shared External Transactions data'

10 AS 'sch_share.fct_trx_ext'

11 WITH HISTORY;

12

13# 3. Add table ctg_mng.sch_mng.fct_transactions_mng

14-- With a specific rule on partition

15-- With alias sch_share.fct_trx_mng

16-- Without historical data (delta)

17ALTER SHARE share_aws_dbx

18ADD TABLE ctg_mng.sch_mng.fct_transactions_mng

19 COMMENT 'Shared Managed Transactions data'

20 AS 'sch_share.fct_trx_mng'

21 PARTITION (id_client = CURRENT_RECIPIENT().id_client)

22 WITHOUT HISTORY;

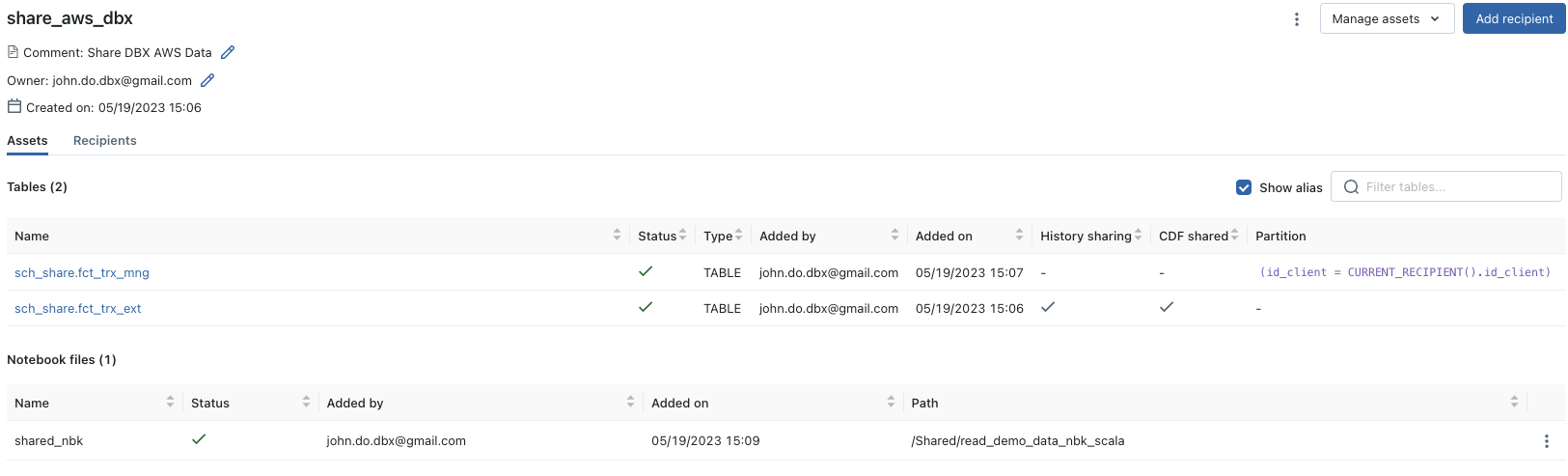

For step n°4 (adding the notebook in the Share), it is not possible to do it directly with SQL commands and we have not managed to do it using Databricks REST API, therefore we will do it manually by using Data Explorer:

- Go to

Workspace Databricks page > Data > Delta Sharing > Shared by me - Click on the desired share (Share)

share_aws_dbx - Click on

Manage assetsand select the optionAdd notebook file - Fill in the information

Notebook filewith the path and name of the file to share/Shared/read_demo_data_nbk_scala - Fill in the

Share asinformation with the name you want to display in the share for the notebookshared_nbk

Result of creating and adding elements in the Share:

Sharing data with a Unity Catalog Metastore on Azure

Sharing data between two Unity Catalog Metastores is called “Databricks-to-Databricks Sharing”.

To achieve this Databricks-to-Databricks sharing, we will use a Unity Catalog Metastore on a Databricks Azure Account in the France Central location. As a reminder, the main Unity Catalog Metastore (provider) is on a Databricks AWS Account and in the eu-west-1 (Ireland) location

Unity Catalog setup on Azure

To do this, we will start by setting up a Metastore on a Databricks Workspace on Azure.

Prerequisites:

- You must have installed the Azure CLI tool and configure the connection to Azure

- You must have created a Databricks Workspace in a Resource Group and your account must have Databricks Workspace administration rights.

- You must have the Databricks Azure Account administration rights

- This right can be given by an account with the “Azure AD Global Administrator” role by connecting to the Databricks Azure Account

- You must have the rights to create the various resources necessary in an Azure Group Resource

The steps required to set up a Unity Catalog Metastore on Azure are :

- Creation of a storage (ADLS Gen2 obligatorily) in the same region as the Metastore

- Creating a Databricks Access Connector

- Assign the Databricks Access Connector with the created storage (ALDLS Gen2)

- Creation of a Metastore

- Association of the Metastore with the Workspace Databricks

- Creation of a Credential Storage (for managing access rights)

- Association of Credential Storage with the Metastore

To speed up the process but keep an educational approach, we will use Azure CLI and Databricks REST API to perform the steps:

1# 1. Create the ADLS GEN 2 storage for the Metastore

2# BlobStorage Creation

3az storage account create --name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME} --access-tier Hot --kind StorageV2 --location ${AZ_REGION} --allow-blob-public-access false --sku Standard_LRS --tags ${AZ_TAGS}

4

5# Remove useless features (blobstorage)

6az storage account blob-service-properties show --account-name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME}

7az storage account blob-service-properties update --account-name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME} --enable-change-feed false --enable-delete-retention false --enable-last-access-tracking false --enable-restore-policy false --enable-versioning false

8

9# Remove useless features (filestorage)

10az storage account file-service-properties show --account-name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME}

11az storage account file-service-properties update --account-name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME} --enable-delete-retention false

12

13# Activate ADLS Gen2

14az storage account hns-migration start --type validation --name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME}

15az storage account hns-migration start --type upgrade --name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME}

16

17# Create a container in the ADLS Gen2 Storage

18export TMP_AZ_ADLS_KEY=`az storage account keys list --account-name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME} -o json | jq '.[0]|.value'`

19az storage container create --name ${AZ_ADLS2_CONTAINER_NAME} --account-name ${AZ_ADLS2_NAME} --account-key ${TMP_AZ_ADLS_KEY} --public-access off --fail-on-exist

20

21

22# 2. Create the databricks access-connector to manage access between Databricks and the ADLS2 Gen storage

23az databricks access-connector create --name ${AZ_DBX_CONNECTOR_NAME} --resource-group ${AZ_RG_NAME} --location ${AZ_REGION} --identity-type SystemAssigned --tags ${AZ_TAGS}

24

25

26# 3. Assign Storage Blob Data Contributor role to Databricks Access Connecter

27export TMP_AZ_ADLS_ID=`az storage account show --name ${AZ_ADLS2_NAME} --resource-group ${AZ_RG_NAME} | jq -r '.id'`

28export TMP_AZ_DBX_CONNECTOR_PRINCIPAL_ID=`az databricks access-connector show --resource-group ${AZ_RG_NAME} --name ${AZ_DBX_CONNECTOR_NAME} -o json | jq -r '.identity.principalId'`

29

30az role assignment create --assignee-object-id ${TMP_AZ_DBX_CONNECTOR_PRINCIPAL_ID} --assignee-principal-type ServicePrincipal --role "Storage Blob Data Contributor" --scope ${TMP_AZ_ADLS_ID}

31

32

33# 4. Create the Metastore in Unity Catalog

34dbx-api -X POST ${DBX_AZ_API_URL}/api/2.1/unity-catalog/metastores -H 'Content-Type: application/json' -d "{\"name\":\"${DBX_AZ_METASTORE_NAME}\", \"region\": \"${AZ_REGION}\", \"storage_root\":\"abfss://${DBX_AZ_ADLS2_METASTORE_PATH}\"}"

35

36# Get the Metastore ID

37export TMP_DBZ_AZ_METASTORE_ID=`dbx-api -X GET ${DBX_AZ_API_URL}/api/2.1/unity-catalog/metastores | jq -r '.metastores[]|select(.name==$ENV.DBX_AZ_METASTORE_NAME)|.metastore_id'`

38

39# 5. Assign the Metastore to the Workspace Databricks Azure

40# It's not possible to create the Storage Credential if the Metastore is not assigned to the Workspace Databricks

41dbx-api -X PUT ${DBX_AZ_API_URL}/api/2.1/unity-catalog/workspaces/${DBX_AZ_WORKSPACE_ID}/metastore -H 'Content-Type: application/json' -d "{\"metastore_id\":\"${TMP_DBZ_AZ_METASTORE_ID}\",\"default_catalog_name\":\"main\"}"

42

43# 6. Create the Storage Credential (for Unity Catalog)

44# Get the databricks access connector id

45export TMP_AZ_DBX_CONNECTOR_ID=`az databricks access-connector show --resource-group ${AZ_RG_NAME} --name ${AZ_DBX_CONNECTOR_NAME} -o json | jq -r '.id'`

46

47# Create the Storage Credential

48dbx-api -X POST -H 'Content-Type: application/json' ${DBX_AZ_API_URL}/api/2.0/unity-catalog/storage-credentials --data "{

49 \"name\": \"${DBX_AZ_METASTORE_SC_NAME}\",

50 \"comment\": \"storage credential for the Metastore\",

51 \"azure_managed_identity\": {

52 \"access_connector_id\": \"${TMP_AZ_DBX_CONNECTOR_ID}\"

53 }

54}"

55

56

57# 7. Assign the Storage Credential to the Metastore

58# Get the Storage Credential id

59export TMP_DBX_AZ_METASTORE_SC_ID=`dbx-api -X GET ${DBX_AZ_API_URL}/api/2.1/unity-catalog/storage-credentials | jq -r '.storage_credentials[]|select(.name==$ENV.DBX_AZ_METASTORE_SC_NAME)|.id'`

60

61# Update Metastore configuration with the Storage Credential

62dbx-api -X PATCH ${DBX_AZ_API_URL}/api/2.1/unity-catalog/metastores/${TMP_DBZ_AZ_METASTORE_ID} -H 'Content-Type: application/json' -d "{\"storage_root_credential_id\": \"${TMP_DBX_AZ_METASTORE_SC_ID}\"}"

If creating the Databricks Access Connector (step #2) does not work with the Azure CLI tool, you can do it manually by following this steps :

- Go to

Microsoft Azure page > Resource Group - Click on the desired resource group

- Click on

Create - Fill in the

Search the Marketplaceinformation with the valueaccess connector for azure databricks - Click on

Create > Access Connector for Azure Databricks - Click on

Create - Fill in the

Subscription,Resource group,NameandRegioninformation with the desired values - Click on

Review + create - Click on

Create

Note: Regarding the SQL Warehouse resource on Azure, a SQL Warehouse 2X-Small requires a value of at least 8 for the quota named Total Regional Spot vCPUs

Setting up a Databricks-to-Databricks recipient

In order to be able to share data with a recipient using a Unity Catalog Metastore, we need to perform the following actions:

- Request the Unity Catalog Metastore sharing identifier of the recipient

- Create a

rcp_azure_dbxrecipient on the Unity Catalog AWS Metastore of the provider using the shared identifier corresponding to the Unity Catalog Azure Metastore of the recipient - Give read rights to the recipient

rcp_azure_dbxon the elements of the share namedshare_aws_dbx

Details of the steps:

- Request the Unity Catalog Metastore sharing identifier of the recipient

The share identifier is a character string composed of the following information : <Metastore Cloud Provider>:<Metastore Region>:<Metastore ID>

There are several ways to get this identifier for the recipient:

- The 1st way (which is the simplest) is to connect to Worspace Databricks and execute the command

select current_metastore()on a SQL Warehouse (orspark.sql("select current_metastore()")in a notebook attached to a cluster with access rights to the Metastore Unity Catalog) - The 2nd way is using Databricks REST API

1# Get Metastore Sharing ID

2dbx-api -X GET ${DBX_AZ_API_URL}/api/2.1/unity-catalog/metastores | jq -r '.metastores[]|select(.name==$ENV.DBX_AZ_METASTORE_NAME)|.cloud+":"+.region+":"+.metastore_id'

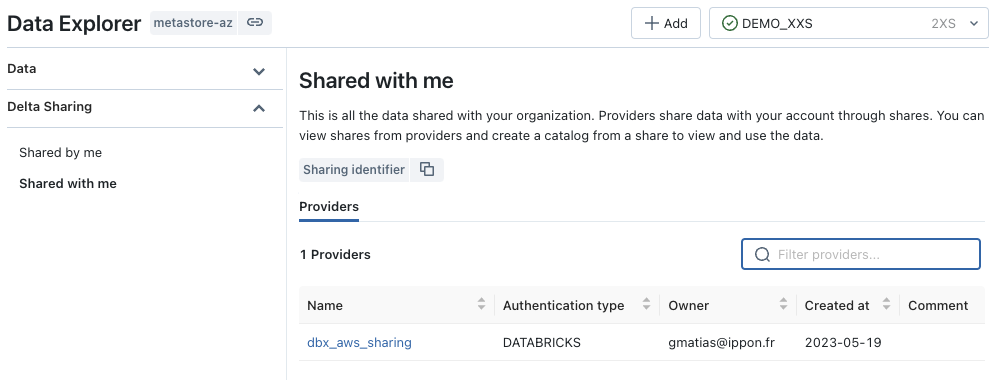

- Le 3ème moyen est d’utiliser l’outil Data Explorer

- Allez dans

Workspace Databricks page > Data > Delta Sharing > Shared with me - Cliquez sur

Copy sharing identifier

- Allez dans

Once the share identifier has been retrieved, it must be sent to the provider of the share.

- Create a

rcp_azure_dbxrecipient on the Unity Catalog AWS Metastore of the provider using the shared identifier corresponding to the Unity Catalog Azure Metastore of the recipient

Note: We will set up a id_client: 1 property to use the partitions of the shared object whose alias is sch_share.fct_trx_mng

The creation of a recipient requires the following rights on the Metastore:

1GRANT CREATE_RECIPIENT ON METASTORE TO grp_demo;

To create a recipient with Databricks REST API :

1# Get the Azure Metastore Sharing Identifier

2export TMP_AZ_METASTORE_SHARING_ID=`dbx-api -X GET ${DBX_AZ_API_URL}/api/2.1/unity-catalog/metastores | jq -r '.metastores[]|select(.name==$ENV.DBX_AZ_METASTORE_NAME)|.cloud+":"+.region+":"+.metastore_id'`

3

4# Create Recipient for Databricks-to-Databricks Share

5dbx-api -X POST ${DBX_API_URL}/api/2.1/unity-catalog/recipients -H 'Content-Type: application/json' -d "

6{

7 \"name\": \"${DBX_RECIPIENT_DBX}\",

8 \"authentication_type\": \"DATABRICKS\",

9 \"data_recipient_global_metastore_id\": \"${TMP_AZ_METASTORE_SHARING_ID}\",

10 \"properties_kvpairs\": {

11 \"properties\": {\"id_client\": \"1\"}

12 },

13 \"comment\" : \"Recipient Databricks (share data between databricks metastore)\"

14 }

15"

To create a recipient with SQL commands :

1-- A recipient created for Databricks to Databricks sharing with a id_client properties

2CREATE RECIPIENT rcp_azure_dbx

3USING ID '<Metastore Azure Sharing identifier>'

4PROPERTIES ( id_client = 1)

5;

6

7-- To get the detail of the recipient

8DESCRIBE RECIPIENT rcp_azure_dbx;

- Give read rights to the recipient

rcp_azure_dbxon the elements of the share namedshare_aws_dbx

1GRANT SELECT ON SHARE share_aws_dbx TO RECIPIENT rcp_azure_dbx;

From this last step, the rcp_azure_dbx recipient will be able to access the objects of the share using his Metastore Unity Catalog with his Workspace Databricks on Azure.

Note: It may take a few seconds or minutes to see the new provider named dbx_aws_sharing appear in the Metastore Unity Catalog on Azure after creating the recipient rcp_azure_dbx on AWS

The result should be the following:

Access through the Unity Catalog Metastore on Azure

Once the Databricks-to-Databricks share has been set up, an administrator of the Metastore Unity Catalog Azure must be able to create a catalog using the information from the share in order to be able to make the objects of the share accessible to Metastore users.

To do this, all you have to do is use the SQL commands to create a catalog and give the rights to use and read the catalog (and objects) as for any other catalog in the Metastore Unity Catalog.

The difference being that the catalog objects linked to the share can only be used in read-only mode and the data is stored in the AWS S3 resource of the provider and not in the Azure ADLS Gen2 resource of the recipient.

Creation of the catalog with an SQL command :

1CREATE CATALOG IF NOT EXISTS ctg_aws

2USING SHARE `dbx_aws_sharing`.share_aws_dbx

3COMMENT 'Shared data from AWS Metastore'

4;

Note: When setting up Databricks-to-Databricks sharing, there is no need to share connection information between the two Metastores. We only used a sharing identifier linked to the Metastore of the recipient, which has the effect of simplifying and securing exchanges and setting up the share.

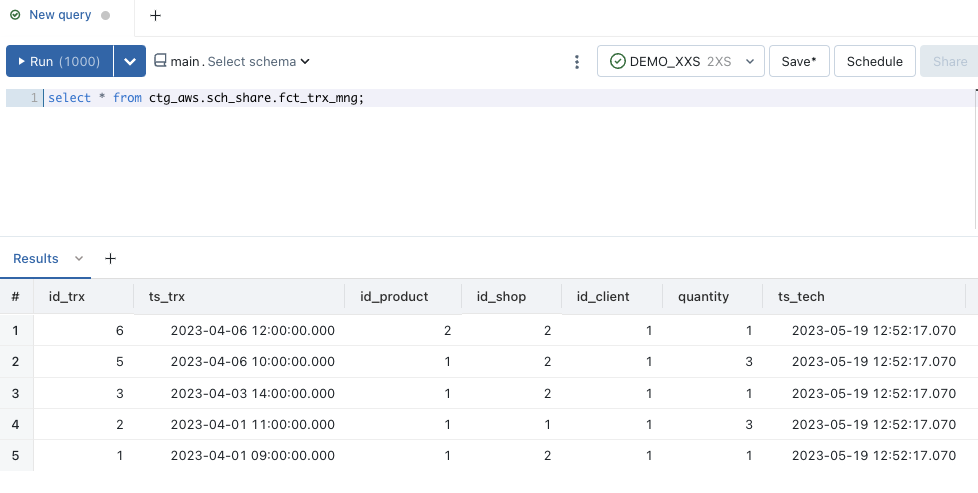

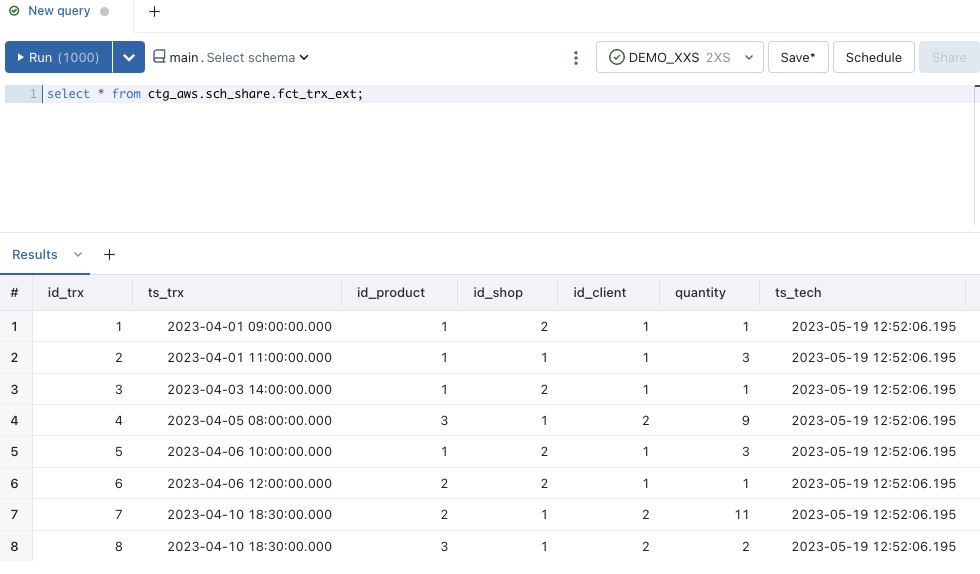

Example of data access from a query on a SQL Warehouse via the Azure Workspace Databricks :

When we access the

sch_share.fct_trx_mngobject, we only see the information whoseid_clientcolumn is equal to the value1because the recipient only has the right to access to this data partition as defined by the provider when creating the recipient

When we access the

sch_share.fct_trx_extobject, we can see all the data.

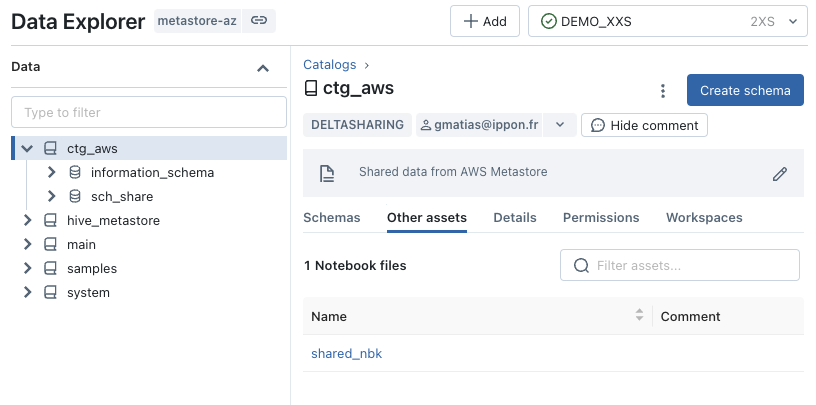

Regarding access to the shared notebook:

To access the shared_nbk notebook, use the Data Explorer tool:

- Go to

Workspace Databricks page > Data - Select Catalog (from Shared Objects)

- Click on the

Other assetstab - Click on the desired notebook

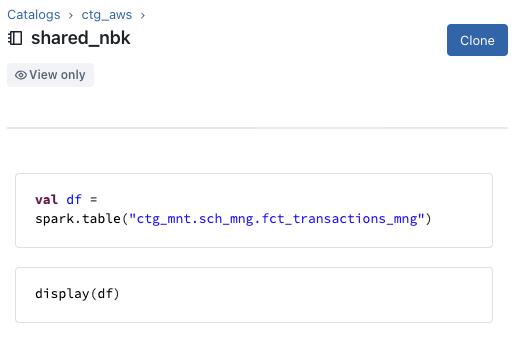

Note: You will only be able to see the cells of the notebook in read-only HTML format and you will be able to clone the notebook into your Workspace Databricks.

Visualization of the list of notebooks shared with the Data Explorer tool

Visualization of the shared_nbk notebook with the Data Explorer tool

Reading audit logs

To access the audit logs more easily, we will create an external table named ctg_ext.sch_ext.audit_logs_json based on the s3://s3-demo-data-uc/dbx_logs/account directory which contains all log files in JSON format with information on events related to the Databricks Account (and to the different Databricks Workspaces).

We will focus only on the deltaSharingQueriedTable and deltaSharingQueryTable actions at the Databricks Account level regarding the rcp_azure_dbx recipient to highlight some information that we can easily retrieve from the audit logs to track access to shared objects.

The steps are :

- Create the external table

ctg_ext.sch_ext.audit_logs_jsonto easily access the JSON files of the AWS S3 resource nameds3://s3-demo-data-uc/dbx_logs/account - Retrieve information about

deltaSharingQueryTableactions from thercp_azure_dbxrecipient - Retrieving

deltaSharingQueriedTableaction information from thercp_azure_dbxrecipient

1-- 1. Create ctg_ext.sch_ext.audit_logs_json from JSON files

2CREATE TABLE ctg_ext.sch_ext.audit_logs_json

3USING JSON

4OPTIONS (path "s3://s3-demo-data-uc/dbx_logs/account");

5

6

7-- 2. Get deltaSharingQueryTable action informations from rcp_azure_dbx recipient

8select requestId

9,requestParams.recipient_name

10,requestParams.share

11,requestParams.schema

12,requestParams.name

13,requestParams.user_agent

14,response.statusCode

15,serviceName

16,sourceIPAddress

17,date

18from ctg_ext.sch_ext.audit_logs_json

19where actionName ='deltaSharingQueryTable'

20and auditLevel = 'ACCOUNT_LEVEL'

21and requestParams.recipient_name in ('rcp_azure_dbx')

22order by timestamp asc

23

24

25-- 3. Get deltaSharingQueriedTable action informations from rcp_azure_dbx recipient

26select requestId

27,requestParams.recipient_name

28,response.result:numRecords

29,response.result:tableName

30,response.result:deltaSharingPartitionFilteringAccessed

31,serviceName

32,sourceIPAddress

33,userAgent

34from ctg_ext.sch_ext.audit_logs_json

35where actionName ='deltaSharingQueriedTable'

36and auditLevel = 'ACCOUNT_LEVEL'

37and requestParams.recipient_name in ('rcp_azure_dbx')

38order by timestamp asc

Result of query n°2 for deltaSharingQueryTable actions :

1requestId,recipient_name,share,schema,name,user_agent,statusCode,serviceName,sourceIPAddress

2CgsI3ceeowYQhsLgAzoQSHf2V13IQFqodUiz6jKNJw==,rcp_azure_dbx,share_aws_dbx,sch_share,fct_trx_mng,Delta-Sharing-Unity-Catalog-Databricks-Auth/1.0 Linux/5.4.0-1107-azure-fips OpenJDK_64-Bit_Server_VM/11.0.18+10-jvmci-22.3-b13 java/11.0.18 scala/2.12.15 java_vendor/GraalVM_Community,200,unityCatalog,

3CgsI78eeowYQvvjMFjoQvIDzt07UQ3mzAJR99KJGpg==,rcp_azure_dbx,share_aws_dbx,sch_share,fct_trx_ext,Delta-Sharing-Unity-Catalog-Databricks-Auth/1.0 Linux/5.4.0-1107-azure-fips OpenJDK_64-Bit_Server_VM/11.0.18+10-jvmci-22.3-b13 java/11.0.18 scala/2.12.15 java_vendor/GraalVM_Community,200,unityCatalog,

This query allows us to access information from query requests executed on shared objects (recipient name, share name, schema name, table name, etc…)

Result of query n°3 for deltaSharingQueriedTable actions :

1requestId,recipient_name,numRecords,tableName,deltaSharingPartitionFilteringAccessed,serviceName,sourceIPAddress,userAgent

2d8140290-4a65-44a2-aac0-31c7feaf4aac,rcp_azure_dbx,5,fct_trx_mng,true,unityCatalog,,Delta-Sharing-Unity-Catalog-Databricks-Auth/1.0 Linux/5.4.0-1107-azure-fips OpenJDK_64-Bit_Server_VM/11.0.18+10-jvmci-22.3-b13 java/11.0.18 scala/2.12.15 java_vendor/GraalVM_Community

3854c0522-aaca-465c-8f7d-1684a003e8e1,rcp_azure_dbx,8,fct_trx_ext,false,unityCatalog,,Delta-Sharing-Unity-Catalog-Databricks-Auth/1.0 Linux/5.4.0-1107-azure-fips OpenJDK_64-Bit_Server_VM/11.0.18+10-jvmci-22.3-b13 java/11.0.18 scala/2.12.15 java_vendor/GraalVM_Community

This query allows us to access the information of the result of the queries executed on the shared objects.

We can see that the query on the fct_trx_mng table has the deltaSharingPartitionFilteringAccessed option enabled and returns only 3 rows (which corresponds to the filter on the partitions) and that the query on the fct_trx_ext table returns 8 rows.

We can also have other information such as the ip address, the agent of the tool that executed the request (Databricks in our case) and many more informations.

Data sharing in Open Sharing

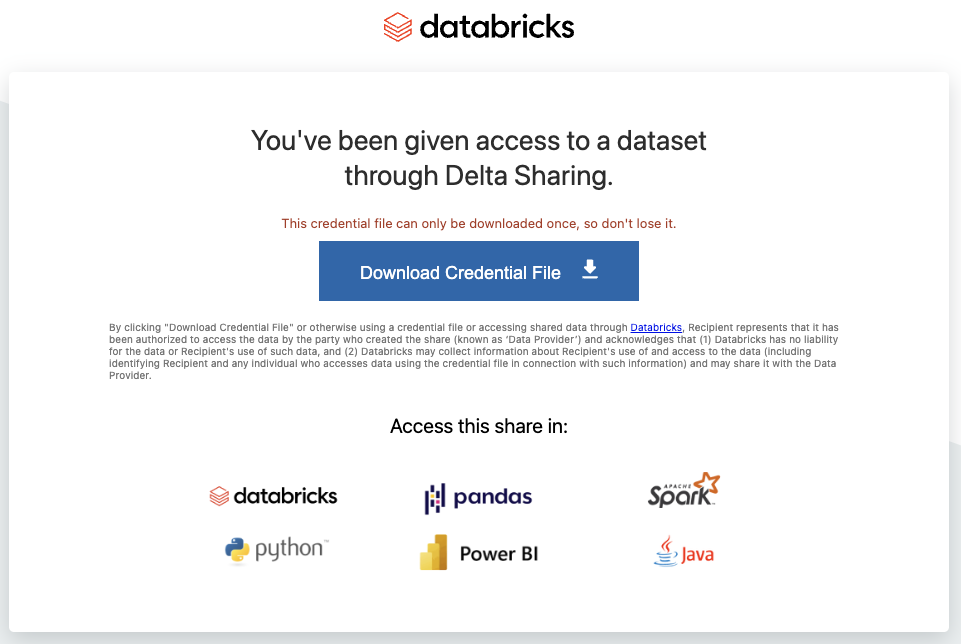

In order to be able to share the data with a recipient not using Metastore Unity Catalog (named Open Sharing), we must perform the following actions :

- Create the

rcp_open_allrecipient based on a Databricks Token (which will have a lifetime defined at the Metastore level) - Give read rights to the

rcp_open_allrecipient on the objects of theshare_aws_dbxshare - Retrieve the activation URL to send to the

rcp_open_allrecipient - The recipient must connect to the given URL and download the configuration file named

config.shareto be able to connect to theshare_aws_dbxshare

Details of the steps:

- Create the

rcp_open_allrecipient based on a Databricks Token

Note: We will set up a id_client: 2 property to use the partitions of the shared object whose alias is sch_share.fct_trx_mng

The creation of a recipient requires the following rights on the Metastore:

1GRANT CREATE_RECIPIENT ON METASTORE TO 'grp_demo';

To create a recipient with Databricks REST API :

1# Create Recipient for Token Open Sharing

2dbx-api -X POST ${DBX_API_URL}/api/2.1/unity-catalog/recipients -H 'Content-Type: application/json' -d "

3{

4 \"name\": \"${DBX_RECIPIENT_OPEN}\",

5 \"authentication_type\": \"TOKEN\",

6 \"properties_kvpairs\": {

7 \"properties\": {\"id_client\": \"2\"}

8 },

9 \"comment\": \"Give access to shared data for external tools\"

10 }

11"

To create a recipient with SQL commands :

1-- A recipient created for sharing outside of Databricks with a id_client properties

2CREATE RECIPIENT rcp_open_all

3COMMENT 'Give access to shared data for external tools'

4PROPERTIES ( id_client = 2)

5;

- Give read rights to the

rcp_open_allrecipient on the objects of theshare_aws_dbxshare

1GRANT SELECT ON SHARE share_aws_dbx TO RECIPIENT rcp_open_all;

- Retrieve the activation URL to send to the

rcp_open_allrecipient The URL retrieval can be done with a SQL command (by the provider) :

1-- Get the activation url (activation_link parameter)

2DESCRIBE RECIPIENT rcp_open_all;

3-- activation_link : https://ireland.cloud.databricks.com/delta_sharing/retrieve_config.html?XXXXXXXXXXXXX

The URL retrieval can be done with the Databricks REST API (by the provider) :

1dbx-api -X GET ${DBX_API_URL}/api/2.1/unity-catalog/recipients/${DBX_RECIPIENT_OPEN} | jq -r '.tokens[0].activation_url'

- The recipient must connect to the given URL and download the configuration file named

config.shareto be able to connect to theshare_aws_dbxshare

Warning: Whatever the method used, the retrieve of connection information can only be done once. This is managed by Databricks.

It is possible to retrieve the information with Databricks REST API:

Note: writing connection information in the file ~/config.share

1# Get the URL Activation Code (from Metastore Unity CAtalog AWS)

2export TMP_DBX_RECIPIENT_OPEN_URL=`dbx-api -X GET ${DBX_API_URL}/api/2.1/unity-catalog/recipients/${DBX_RECIPIENT_OPEN} | jq '.tokens[0].activation_url' | sed "s/.*?//" | sed 's/"//'`

3

4# Extract information to write the config.share file (instead of download from databricks activation page) from Public API

5dbx-api -X GET ${DBX_API_URL}/api/2.1/unity-catalog/public/data_sharing_activation/${TMP_DBX_RECIPIENT_OPEN_URL} > ~/config.share

For manual retrieval of config.share connection information, simply access the provided activation URL:

Information :

- You will only be able to access the activation URL and the objects of the share during the validity period of the Token Databricks

- It is possible to access the activation URL as many times as you want (during the validity period) but the download of the configuration file

config.shareis only possible once- It is through this configuration file

config.sharethat you will be able to access shared objects

- It is through this configuration file

- It is possible to rotate Token Databricks to re-enable access to shared objects, but this requires getting a new configuration file

config.share(the keys will be different)

Example of a rotation of Databricks Token (if for example the activation could not be done during the initial validity period) with Databricks REST API :

1# If you need to rotate the Databricks Token

2dbx-api -X POST ${DBX_API_URL}/api/2.1/unity-catalog/recipients/${DBX_RECIPIENT_OPEN}/rotate-token -H 'Content-Type: application/json' -d '{

3"existing_token_expire_in_seconds": 0

4}'

From step n°4, the recipient can access the objects (delta tables only) of the share using the desired tool depending on the delta-sharing connectors available (python, java, sparks, etc …)

Access by python script

Prerequisites: the configuration file config.share has been retrieved locally ~/config.share

Installation of the delta-sharing python library proposed by Databricks

1pip install delta-sharing

Example of a python script to display the contents of shared delta tables :

1import delta_sharing

2from tabulate import tabulate

3

4# Define the profile file (config.share)

5profile_file = "~/config.share"

6

7# Define the parameter to read data

8read_share = "share_aws_dbx"

9read_schema = "sch_share"

10read_table_mng = "fct_trx_mng"

11read_table_ext = "fct_trx_ext"

12

13# Get the data from shared table fct_trx_mng

14df_pandas_mng = delta_sharing.load_as_pandas("{0}#{1}.{2}.{3}".format(profile_file,read_share,read_schema,read_table_mng))

15print("Table : [{0}.{1}.{2}]".format(read_share,read_schema,read_table_mng))

16print(tabulate(df_pandas_mng, headers = 'keys', tablefmt = 'pretty'))

17

18

19# Get the data from shared table fct_trx_ext

20df_pandas_ext = delta_sharing.load_as_pandas("{0}#{1}.{2}.{3}".format(profile_file,read_share,read_schema,read_table_ext))

21print("Table : [{0}.{1}.{2}]".format(read_share,read_schema,read_table_ext))

22print(tabulate(df_pandas_ext, headers = 'keys', tablefmt = 'pretty'))

Script result :

1# Result :

2Table : [share_aws_dbx.sch_share.fct_trx_mng]

3+---+--------+---------------------+------------+---------+-----------+----------+----------------------------+

4| | id_trx | ts_trx | id_product | id_shop | id_client | quantity | ts_tech |

5+---+--------+---------------------+------------+---------+-----------+----------+----------------------------+

6| 0 | 8 | 2023-04-10 18:30:00 | 3 | 1 | 2 | 2 | 2023-05-19 12:52:17.070000 |

7| 1 | 7 | 2023-04-10 18:30:00 | 2 | 1 | 2 | 11 | 2023-05-19 12:52:17.070000 |

8| 2 | 4 | 2023-04-05 08:00:00 | 3 | 1 | 2 | 9 | 2023-05-19 12:52:17.070000 |

9+---+--------+---------------------+------------+---------+-----------+----------+----------------------------+

10

11Table : [share_aws_dbx.sch_share.fct_trx_ext]

12+---+--------+---------------------+------------+---------+-----------+----------+----------------------------+

13| | id_trx | ts_trx | id_product | id_shop | id_client | quantity | ts_tech |

14+---+--------+---------------------+------------+---------+-----------+----------+----------------------------+

15| 0 | 1 | 2023-04-01 09:00:00 | 1 | 2 | 1 | 1 | 2023-05-19 12:52:06.195000 |

16| 1 | 2 | 2023-04-01 11:00:00 | 1 | 1 | 1 | 3 | 2023-05-19 12:52:06.195000 |

17| 2 | 3 | 2023-04-03 14:00:00 | 1 | 2 | 1 | 1 | 2023-05-19 12:52:06.195000 |

18| 3 | 4 | 2023-04-05 08:00:00 | 3 | 1 | 2 | 9 | 2023-05-19 12:52:06.195000 |

19| 4 | 5 | 2023-04-06 10:00:00 | 1 | 2 | 1 | 3 | 2023-05-19 12:52:06.195000 |

20| 5 | 6 | 2023-04-06 12:00:00 | 2 | 2 | 1 | 1 | 2023-05-19 12:52:06.195000 |

21| 6 | 7 | 2023-04-10 18:30:00 | 2 | 1 | 2 | 11 | 2023-05-19 12:52:06.195000 |

22| 7 | 8 | 2023-04-10 18:30:00 | 3 | 1 | 2 | 2 | 2023-05-19 12:52:06.195000 |

23+---+--------+---------------------+------------+---------+-----------+----------+----------------------------+

We can see that the first delta table share_aws_dbx.sch_share.fct_trx_mng only returns the information corresponding to the partition id_client = 2 and the second delta table share_aws_dbx.sch_share.fct_trx_ext returns all the rows.

With this python script, we can see that it is extremely easy to access shared delta tables.

Example of a python script to list the delta tables of the share with the SharingClient object :

1import delta_sharing

2

3# Define the profile file (config.share)

4profile_file = "~/config.share"

5

6# Get the Client objet to managed the Sharing connexion

7client = delta_sharing.SharingClient(profile_file)

8

9print("Listing of All the Tables :")

10for element in client.list_all_tables():

11 print(" - {}".format(element))

12

13print("\nListing of Shares : ")

14l_shares = client.list_shares()

15for element in l_shares:

16 print(" - {}".format(element))

17

18print("\nListing of Schema from Share [{}] : ".format(l_shares[0].name))

19l_schemas = client.list_schemas(l_shares[0])

20for element in l_schemas :

21 print(" - {}".format(element))

22

23print("\nListing of Tables from Schema [{}] from Share [{}] : ".format(l_schemas[0].name,l_shares[0].name))

24l_tables = client.list_tables(l_schemas[0])

25for element in l_tables :

26 print(" - {}".format(element))

Script result :

1Listing of All the Tables :

2 - Table(name='fct_trx_ext', share='share_aws_dbx', schema='sch_share')

3 - Table(name='fct_trx_mng', share='share_aws_dbx', schema='sch_share')

4

5Listing of Shares :

6 - Share(name='share_aws_dbx')

7

8Listing of Schema from Share [share_aws_dbx] :

9 - Schema(name='sch_share', share='share_aws_dbx')

10

11Listing of Tables from Schema [sch_share] from Share [share_aws_dbx] :

12 - Table(name='fct_trx_ext', share='share_aws_dbx', schema='sch_share')

13 - Table(name='fct_trx_mng', share='share_aws_dbx', schema='sch_share')

Lecture des logs d’audit

We will use the same procedure as for reading the Databricks-to-Databricks Sharing audit logs.

We will only focus on the deltaSharingQueriedTable and deltaSharingQueryTable actions at the Databricks Account level for the rcp_open_all recipient to highlight some information that we can easily retrieve from the audit logs to track access to shared objects.

1-- 1. Create ctg_ext.sch_ext.audit_logs_json from JSON files

2CREATE TABLE ctg_ext.sch_ext.audit_logs_json

3USING JSON

4OPTIONS (path "s3://s3-demo-data-uc/dbx_logs/account");

5

6

7-- 2. Get deltaSharingQueryTable action informations from rcp_open_all recipient

8select requestId

9,requestParams.recipient_name

10,requestParams.share

11,requestParams.schema

12,requestParams.name

13,requestParams.user_agent

14,response.statusCode

15,serviceName

16,sourceIPAddress

17,date

18from ctg_ext.sch_ext.audit_logs_json

19where actionName ='deltaSharingQueryTable'

20and auditLevel = 'ACCOUNT_LEVEL'

21and requestParams.recipient_name in ('rcp_open_all')

22order by timestamp asc

23

24

25

26-- 2. Get information from deltaSharingQueriedTable action from rcp_open_all recipient

27select requestId

28,requestParams.recipient_name

29,response.result:numRecords

30,response.result:tableName

31,response.result:deltaSharingPartitionFilteringAccessed

32,serviceName

33,sourceIPAddress

34,userAgent

35from ctg_ext.sch_ext.audit_logs_json

36where actionName ='deltaSharingQueriedTable'

37and auditLevel = 'ACCOUNT_LEVEL'

38and requestParams.recipient_name in ('rcp_open_all')

39order by timestamp asc

Result of query n°2 for deltaSharingQueryTable actions :

1requestId,recipient_name,share,schema,name,user_agent,statusCode,serviceName,sourceIPAddress

2d59abaa0-2829-4d4b-90ea-73e9f4ec11ee,rcp_open_all,share_aws_dbx,sch_share,fct_trx_mng,Delta-Sharing-Python/0.6.4 pandas/1.3.4 PyArrow/11.0.0 Python/3.11.3 System/macOS-13.3.1-arm64-arm-64bit,200,unityCatalog,86.247.59.138

30690bef9-413d-44ec-8541-4901273aa589,rcp_open_all,share_aws_dbx,sch_share,fct_trx_ext,Delta-Sharing-Python/0.6.4 pandas/1.3.4 PyArrow/11.0.0 Python/3.11.3 System/macOS-13.3.1-arm64-arm-64bit,200,unityCatalog,86.247.59.138

This query allows us to access information from query requests executed on shared objects (recipient name, share name, schema name, table name, etc…)

Result of query n°3 for deltaSharingQueriedTable actions :

1requestId,recipient_name,numRecords,tableName,deltaSharingPartitionFilteringAccessed,serviceName,sourceIPAddress,userAgent

288dc2822-63c1-4384-b706-82ab9d5f5d9a,rcp_open_all,3,fct_trx_mng,true,unityCatalog,86.247.59.138,Delta-Sharing-Python/0.6.4 pandas/1.3.4 PyArrow/11.0.0 Python/3.11.3 System/macOS-13.3.1-arm64-arm-64bit

32be8d1b6-936c-40d9-89c1-60d104e9f50f,rcp_open_all,8,fct_trx_ext,false,unityCatalog,86.247.59.138,Delta-Sharing-Python/0.6.4 pandas/1.3.4 PyArrow/11.0.0 Python/3.11.3 System/macOS-13.3.1-arm64-arm-64bit

This query allows us to access the information of the result of the queries executed on the shared objects.

We can see that the query on the fct_trx_mng table has the deltaSharingPartitionFilteringAccessed option enabled and returns only 5 rows (which corresponds to the filter on the partitions) and that the query on the fct_trx_ext table returns 8 rows.

We can also have other information such as the ip address, the agent of the tool that executed the request (Python/pandas in our case) and many other information.

Clean environment

You will find, below, all the instructions necessary to clean the environment.

Deleting recipients (with Databricks REST API) :

1dbx-api -X DELETE ${DBX_API_URL}/api/2.1/unity-catalog/recipients/${DBX_RECIPIENT_DBX}

2dbx-api -X DELETE ${DBX_API_URL}/api/2.1/unity-catalog/recipients/${DBX_RECIPIENT_OPEN}

Deleting imported notebook (with Databricks REST API) :

1dbx-api -X POST ${DBX_API_URL}/api/2.0/workspace/delete -H 'Content-Type: application/json' -d '{"path": "/Shared/read_demo_data_nbk_scala", "recursive":"false"}'

Deleting share (with Databricks REST API) :

1# Delete Share

2dbx-api -X DELETE ${DBX_API_URL}/api/2.1/unity-catalog/shares/${DBX_SHARE_NAME}

Deleting catalog in Metastore Unity Catalog (with SQL commands) :

1-- Delete the Catalog with CASCADE option (to delete all objects)

2DROP CATALOG IF EXISTS ctg_mng CASCADE;

3DROP CATALOG IF EXISTS ctg_ext CASCADE;

Deactivating data sharing feature on the Unity Catalog Metastore (with Databricks REST API):

1# Get the Metastore ID (databricks)

2export TMP_DBX_METASTORE_ID=`dbx-api -X GET ${DBX_API_URL}/api/2.1/unity-catalog/metastores | jq -r '.metastores[]|select(.name==$ENV.DBX_METASTORE_NAME)|.metastore_id'`

3

4# Update the Metastore configuration to deactivate Delta Sharing (external)

5dbx-api -X PATCH ${DBX_API_URL}/api/2.1/unity-catalog/metastores/${TMP_DBX_METASTORE_ID} -H 'Content-Type: application/json' -d "{

6 \"delta_sharing_scope\": \"INTERNAL\"

7}"

Deleting data stored in the AWS S3 resource (with AWS CLI) :

1# Delete all files stored in the AWS S3 resource

2aws s3 rm "s3://s3-demo-data-uc/demo/" --recursive

3aws s3 rm "s3://s3-demo-data-uc/data/" --recursive

4aws s3 rm "s3://s3-demo-data-uc/dbx_logs/" --recursive

Deleting all elements in the Azure Databricks Account (with Azure CLI and Databricks REST API) :

1# Delete Metastore

2dbx-api -X DELETE ${DBX_AZ_API_URL}/api/2.1/unity-catalog/metastores/${DBZ_AZ_METASTORE_ID} -H 'Content-Type: application/json' -d '{"force": "true"}'

3

4# Delete Databricks Access Connector

5az databricks access-connector delete --resource-group ${AZ_RG_NAME} --name ${AZ_DBX_CONNECTOR_NAME}

6

7# Delete ADLS Gen2 Storage

8az storage account delete -n ${AZ_ADLS2_NAME} -g ${AZ_RG_NAME}

Note : Consider disabling audit logs configuration with Databricks REST API if this is no longer needed

Conclusion

With the Delta Sharing functionality, the Unity Catalog solution makes it possible to set up governance and data sharing in a very simple and secure way with internal (teams, subsidiaries) and external partners in order to better enhance and monetize them.

The management of shared objects can be done only with SQL commands, which makes the administration of shares and the management of access for the different recipients very simple and efficient.

Data sharing with Delta Sharing does not require the use of Databricks computing resources (Cluster or SQL Warehouse) of the provider to access shared objects. Delta Sharing makes it possible to share data, which can be voluminous, in an efficient way and by limiting costs by providing transparent access directly to data storage in a secure way (AWS S3, Azure ADLS, GCP)

This makes it possible to multiply the data usage without having to duplicate it and to be able to access it from a very large number of tools and technologies (Spark, Python, Java, Scala, PowerBI and many others in the futur)

By relying on audit logs, we have the possibility of tracking all the events related to the Metastores of the Unity Catalog solution at the account level and to be able to analyze the use of shared objects by the different recipients.

At the time of writing this document (April/May 2023), it was not yet possible to share other objects than delta tables (managed or external) and notebooks (only for Databricks-to-Databricks) but the Databricks roadmap for the next versions confirms that we will soon be able to share many more types of objects.

This functionality is still recent but should become essential in the near future for all Databricks users for the management and governance of a Data Lake or a Lakehouse in order to maximize the data usage by all partners (internal and external) with the Unity Catalog solution.